Update (15 August 2007):

Recent email exchanges with Guy Grantham, David Tombe, Jonathan vos Post and others have led me to reconsider the presentation of ideas delivered in this blog in posts such as this and this. If you immerse yourself deeply in a particular problem, it can be difficult for others to grasp quick explanations you give. I’ll leave both those posts and add here some introductory material.

The Standard Model U(1)xSU(2)xSU(3) is defective in including U(1) for electromagnetism which has only one charge and one gauge boson (reasons explained below and in previous posts). U(1)xSU(2) requires a Higgs field to break the symmetry by giving the weak bosons of SU(2) mass at low energy, which limits their range. The Higgs field cannot make falsifiable predictions and has not been verified. In addition there are no falsifiable predictions from attempts to unify the U(1)xSU(2)xSU(3) standard model with gravitation. Instead of U(1)xSU(2)xSU(3), consider the simplicity of SU(2)xSU(3), where SU(2) is an entire electroweak-gravity unification which can be understood by the SU(2) weak symmetry in the standard model as modelling weak isospin (a handed charge), weak hypercharge/electric charge, and gravitational charge (mass) all in one, by utilising 3 weak gauge bosons which exist in both massive and massless forms. The massless forms of the W_+ and W_- gauge bosons mediate positive and negative electric force fields of electromagnetism, while the massless version of the Z_0 mediates gravity. The massive forms of these three gauge bosons are the same as those in the existing weak isospin theory. The mechanism by which mass is acquired by weak gauge bosons limits their interactions to one handedness. The simplicity of having a massless spin-1 gauge boson for gravity is demonstrated by the following mechanism:

Galaxy recession velocity: v = dR/dt = HR. Acceleration: a = dv/dt = d(HR)/dt = H*dR/dt = Hv = H(HR) = RH^2, so: 0 < a < 6*10^-10 ms^-2. Outward force: F = ma. Newton’s 3rd law predicts equal inward force: non-receding nearby masses don’t give any reaction force, so they cause an asymmetry, gravity. It predicts particle physics and cosmology. In 1996 it predicted the lack of deceleration at large redshifts.

Above, v = HR is the Hubble law (1929) based on empirical observations; see the great page discrediting lies about “tired light” which has no empirical evidence whatsoever unlike redshift due to the recession of light sources: http://www.astro.ucla.edu/~wright/tiredlit.htm.

Now remember that when we look to bigger distances R, we’re looking back in time (the sun is seen as it was 8.3 minutes ago, the next nearest star as it was about 4 years ago, and the cosmic background radiation – the most distant light source – is redshifted fireball radiation coming from about 13,700,000,000 years ago).

Hence, the Hubble parameter H = v/R can be written better in terms of v/time, where v is recession velocity and time is the time in the past when the light was emitted, because we only know the recession velocity v as a function of time past, not as a function of distance R irrespective of time (while the starlight was in transit to us for many years, the star’s velocity will have changed!). Notice that the constant v/time is an acceleration. Specifically:

a = dv/dt = d(HR)/dt = H*dR/dt = Hv = H(HR) = (H^2)R
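As a rough numerical check of the expression above, the sketch below evaluates a = (H^2)R at the largest distance, R = c/H, where it reduces to a = Hc. The value H = 70 km/s/Mpc is an assumed round figure rather than one quoted in this post, and the function name is purely illustrative.

```python
# Rough numerical check of a = (H^2) * R, treating H as constant.
C = 2.998e8              # speed of light, m/s
MPC_IN_M = 3.0857e22     # one megaparsec in metres

def hubble_acceleration(h_km_s_mpc, distance_m):
    """Return a = H^2 * R in m/s^2 (hypothetical helper for illustration)."""
    h_si = h_km_s_mpc * 1.0e3 / MPC_IN_M   # convert km/s/Mpc to 1/s
    return h_si ** 2 * distance_m

H_ASSUMED = 70.0                            # km/s/Mpc, assumed value
h_si = H_ASSUMED * 1.0e3 / MPC_IN_M         # Hubble parameter in 1/s
r_max = C / h_si                            # distance at which v = HR reaches c
a_max = hubble_acceleration(H_ASSUMED, r_max)
print(f"a at R = c/H: {a_max:.1e} m/s^2")
```

With this assumed H the result is of the same order as the 6*10^-10 ms^-2 figure; a different choice of H shifts it proportionally.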

This acceleration is very real. It’s something like 6*10^{-10} ms^{-2} at the biggest distances, which is small compared to the accelerations we know, but is massive in terms of the amount of mass in the universe which is accelerating outward!

Newton says (2nd law): F = ma. The mass of the observable universe is approximately 10^80 hydrogen atoms or about 10^53 kg (with a factor of ten error, depending mainly on what assumptions people make about whether dark matter exists, etc.). This means that the outward force is on the order of F = (10^53)*(6*10^-10) = 6*10^43 Newtons (approximately).
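The arithmetic in that paragraph can be reproduced in a few lines. This is only a sketch using the round figures quoted in the text; the hydrogen atom mass is a standard value added here to check the conversion from 10^80 atoms to kilograms.

```python
# Order-of-magnitude arithmetic from the text: F = m * a.
HYDROGEN_MASS_KG = 1.6735e-27     # mass of one hydrogen atom (standard value)
ATOM_COUNT = 1e80                 # number of hydrogen atoms quoted in the text
MASS_UNIVERSE_KG = 1e53           # round mass figure quoted in the text
ACCELERATION = 6e-10              # m/s^2, figure quoted in the text

mass_from_atoms = ATOM_COUNT * HYDROGEN_MASS_KG   # checks the "about 10^53 kg" claim
force_newtons = MASS_UNIVERSE_KG * ACCELERATION   # Newton's 2nd law

print(f"mass from atom count: {mass_from_atoms:.1e} kg")
print(f"F = {force_newtons:.0e} N")

# The same estimate with the quoted factor-of-ten uncertainty in the mass.
low, high = force_newtons / 10, force_newtons * 10
print(f"range: {low:.0e} N to {high:.0e} N")
```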

That’s a big outward force, but what’s more exciting is that Newton’s 3rd law of motion says that there should be an equal inward reaction force:

F_outward = -F_inward

That means that pushing inward on every point there is 6*10^43 Newtons or whatever! The only stuff it can be in the known facts of QFT and GR is graviton type gauge boson radiation. Now imagine there is a shield called the Earth below your feet: each electron and quark has a cross-sectional area equal to its tiny black hole size, radius 2GM/c^2, which reflects gauge bosons (fermions are gravitationally trapped Heaviside energy currents, black holes). What is the effect you calculate should result from the asymmetry in this 6*10^43 N shielding by the Earth? Turns out, it predicts 10 ms^{-2} acceleration towards the earth at the surface!
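To give a feel for the size of the shielding cross-section mentioned above, the sketch below evaluates the radius 2GM/c^2 for an electron mass and the corresponding disc area. It only computes that radius and area; it does not attempt the shielding calculation itself. The constants are standard rounded values and the function name is illustrative.

```python
import math

G = 6.674e-11              # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8                # speed of light, m/s
ELECTRON_MASS = 9.109e-31  # kg

def black_hole_radius(mass_kg):
    """Return the radius 2GM/c^2 in metres for a given mass."""
    return 2.0 * G * mass_kg / C ** 2

r_e = black_hole_radius(ELECTRON_MASS)
area_e = math.pi * r_e ** 2     # cross-sectional area of a disc of that radius
print(f"radius: {r_e:.2e} m, cross-section: {area_e:.2e} m^2")
```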

Regarding the earlier post https://nige.wordpress.com/2007/06/20/the-mathematical-errors-in-the-standard-model-of-particle-physics/, fig. 4 shows radiation is the propagation of energy, which occurs in two modes:

(1) massless, electrically “neutral” (as a whole, obviously within the spatial dimensions of the photon the electric field is not neutral) radiation propagates because it goes with an electric field (electric “charge”) which varies in strength transversely in such a way that the magnetic fields cancel out, so there is no prevention of propagation due to infinite magnetic self-inductance. That can go either with an oscillation longitudinally (like AC electricity, photons, gamma rays) or not (like DC electricity and as radiation these are gravity-causing gauge bosons).

(2) massless, electrically charged radiation propagates only when there is equal going from charge A to charge B and from charge B to charge A; this exchange makes the magnetic field curls cancel, allowing propagation.

A small sized pulse of oscillatory energy is a “real photon”. If you have radiation trapped by gravity in a small loop, the opposite travelling radiation on each side of the loop will cancel the magnetic field curl of the other, permitting it to exist. This is how you get a fermion core, such as the core of the electron.

a) – there are two possibilities, neutral photons which can oscillate longitudinally as well as transversely (light photons, gamma rays, etc) and neutral photons which don’t oscillate longitudinally, and so only vary in strength transversely (flat-topped waves like DC, long TEM waves, or gravity-causing exchange radiation). If such radiations are trapped by gravity into a small loop, you get a “static” charge of small spatial size, radius 2GM/c^2. This looks like a “particle”.

b) – as fig. 4 of my reference explains, continuous emission of flat-topped transverse-only radiation (with no variation of field strengths longitudinally, unlike Maxwell’s picture of a light wave) is gauge boson radiation, which can be charged or uncharged. If charged, it causes the electric fields in space between charges. If uncharged, it causes gravity.

What is curious is the question of whether they are ignoring it 99% due to bigotry and 1% due to ignorance, or 100% due to bigotry. If the former case is true, then I can potentially help matters by continuing efforts to overcome ignorance. If the latter is true, it’s hopeless. Another question, should I be concerned at all that work is ignored? In other words, is the aim just to find out facts, or to find out and then publicise (market) the facts? The marketing of ideas is quite a different area of work from coming up with them; in particular, it must address the facts you have to the needs of potential readers of the paper, which are possibly quite different in direction from most of the things you might actually prefer to write about.

On the other hand, the basic principle of modern marketing is having a great product. String “theory” is an example of a successful marketing effort, although it should be criticised on the basis that “it” is 10^500 models, and therefore the best version of the theory is not even wrong. I don’t however think that string “theory” is a good example of modern marketing. In string “theory”, the public who get most excited are those people who turn to science as an alternative belief system to religion; religion loses credibility to them, and they believe in string instead. The High Priests at the top of the string community like Witten believe in string as the “best” option just as the ignorant disciples at the grass roots of that community do. String “theory” is a great example of a financially successful modern religion involving several branches of mathematics. It’s not a good marketing success in the scientific sense, because it’s not delivering the type of science that is now most needed. Marketing cigarettes is not a good modern marketing strategy because it’s not giving people what they need: marketing an addiction to expensive products which cause an increased risk of lung and throat cancer is a poor business success. String theory is similar. It’s addictive, it destroys objectivity and replaces it by non-falsifiable faiths which lead the poor believer into a fantasy land of subjectivity; it’s based on ignorant speculations, not entirely upon solid empirical factual foundations. Proper marketing of science should succeed at some point when the factual foundations can be made clear to all, and when bigotry towards “alternative” ideas has been dispelled. God knows how long that will be, or how much effort it will take.

(End of update)

The electromagnetic gauge bosons: electricity (Heaviside energy or TEM – transverse electromagnetic – wave) energy waves are directly observable ‘gauge bosons’, because the light velocity force field which mediates electromagnetic interactions and accelerates electrons in conductors is the same thing as the gauge boson

I have added some updates to this post and want to make one point lucid at the start. The normal mechanism for energy transfer in electricity is due to the electromagnetic force field which propagates at the velocity of light. This was unknown to Maxwell, who wrote in his Treatise, 3rd ed., 1873, Article 574: ‘… there is, as yet, no experimental evidence to shew whether the electric current… velocity is great or small as measured in feet per second.’ The first evidence emerged two years later, in 1875, when Heaviside measured it experimentally, using logic signals (Morse) in the undersea telegraph cable between Newcastle and Denmark: electromagnetic energy goes at light speed. Now Maxwell knew that the physical constants of electromagnetism yield light speed, but he didn’t think that electricity – which he associated with matter in conductors – would travel at the same velocity as radiation. This is why in the same Treatise, Article 769, he wrote: ‘… we may define the ratio of the electric units to be a velocity… this velocity is about 300,000 kilometres per second.’ Maxwell was completely prejudiced (or ‘confident’, if you prefer that term) that, when a 300,000 km/s velocity comes out of electric and magnetic measurements using currents in wires to produce electric fields and electromagnets, the theory is demonstrating Faraday’s conjecture of 1846 that light is oscillations of electric and magnetic field lines. He ‘knew’ that the speed was evidence of light because that speed was already known in one other context: the velocity of light.

Problem: unknown to Maxwell, the very stuff he was measuring these electric and magnetic constants with, electricity, has the unfortunate property of going at light velocity. Or to be more precise: in a given medium (glass, plastic, air, vacuum, etc.), the velocity of light and of electricity is identical! This is exactly analogous to Yukawa’s prediction of the field meson, hailed as evidence that the muon was Yukawa’s particle, that the muon stopped the protons in the nucleus from exploding. Just as in the case of the muon-pion (meson) muddle up, the photon has been muddled up with the electromagnetic gauge boson by Maxwell (who didn’t know Yang-Mills gauge theory, the speed of electricity, the fact that electrons are accelerated by a field composed entirely of gauge bosons, etc.).

Contrary to Maxwell’s conception of electricity, the electron drift in a wire involves only conduction band electrons which have a mass of about 0.5% the mass of the wire and which move at typically 0.001 m/s, so it doesn’t have a high energy density: the kinetic energy that the electrons have is 0.5mv^2. So a wire can carry about 0.5*0.005*0.001^2 = 0.0000000025 Joules per kilogram via ‘electric current’ which flows at 1 mm/s, which is best emphasised by writing it out long hand. There is no significant energy there. That’s trivial energy. You don’t need to be Einstein to point out that it’s a popular lie that this electric current has anything to do with the delivery of the electric energy we use!
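The estimate above is simple enough to check directly. The sketch below just evaluates 0.5*m*v^2 per kilogram of wire, using the mass fraction and drift speed given in the text; the function name is illustrative.

```python
# Kinetic energy of drifting conduction electrons, per kilogram of wire.
ELECTRON_MASS_FRACTION = 0.005   # conduction electrons as about 0.5% of the wire mass
DRIFT_SPEED = 0.001              # m/s, typical drift speed quoted in the text

def drift_kinetic_energy_per_kg(mass_fraction, speed):
    """Return 0.5 * m * v^2 in joules for the electrons in 1 kg of wire."""
    return 0.5 * mass_fraction * speed ** 2

energy = drift_kinetic_energy_per_kg(ELECTRON_MASS_FRACTION, DRIFT_SPEED)
print(f"{energy:.1e} J per kg of wire")
```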

Gauge bosons – composing the electromagnetic force field which moves at 300,000 km/s in vacuum or nearly that in air – are what we use as ‘electricity’. This is the TEM wave or the Heaviside energy current, not electric drift current. Gauge bosons form a field composed of transverse electromagnetic radiation which induces forces on charges, accelerating the electrons in the conductor by delivering energy! There is everything to be learned about quantum field theory by carefully studying this one macroscopic example of gauge boson radiation, which is on a scale we can cheaply and easily do experiments with. It’s interesting that in the mid-1960s, a chip cross-talk engineer tried charging up a simple capacitor (with air as the dielectric between the conductors), while measuring what occurred using high speed sampling oscilloscopes which were not available to Maxwell or Heaviside:

‘a) Energy current [gauge bosons] can only enter a capacitor at the speed of light.

b) Once inside, there is no mechanism for the energy current to slow down below the speed of light.

c) The steady electrostatically charged capacitor is indistinguishable from the reciprocating, dynamic model.

d) The dynamic model is necessary to explain the new feature to be explained, the charging and discharging of a capacitor, and serves all the purposes previously served by the steady, static model.’

The funny thing is that nobody has ever observed a ‘charge’, which is simply the name given to what is presumed (without any evidence) to be the cause of a ‘static field’. Problem is, Yang-Mills theory makes it clear that the cause of a ‘static field’ is moving exchange radiation, gauge bosons. There is no theory of a static charge which works: the classical model of the electron (which assumes it to be static) gives a radius far bigger than observed in electron collision experiments, so it is wrong. What people do is:

1. they observe an electric field.

2. they lie and claim that the observed electric field is fundamentally ‘static’, ignoring the fact it is composed of moving gauge bosons (i.e., ignoring Yang-Mills theory and the experiments quoted above).

3. they lie further and claim that the imaginary ‘static’ electric field proves the existence of ‘static’ charge, despite there being no way to actually probe the Planck scale or black hole scale for an electron.

4. they lie still further by claiming that, despite endless lying and total fantasy, they are scientists.

5. they won’t ever admit that they’re pseudoscientists (believing in a false religion which is based on laziness, ignorance and lies). To argue with such people is like playing a game with a cheat: if you do manage to win they won’t concede defeat, they will keep arguing endlessly. You can’t win if you allow them to play the game by their bogus rules.

(For more on deducing the nature of electromagnetic gauge bosons using accurate observations of TEM waves in logic pulses, see figures 2, 3 and 4 of: https://nige.wordpress.com/2007/06/20/the-mathematical-errors-in-the-standard-model-of-particle-physics/.)

It’s exactly the way Aristarchus’ solar system was falsely dismissed: if people are prejudiced, they will interpret what they see in a lying way. For example they will interpret the appearance of the sun as a result of ‘sunrise’ resulting from motion of the sun, instead of allowing the possibility that the effect they see is just due to the daily rotation of the planet they are living on. It’s no use pointing out the facts to such people: they are too busy doing ‘useful string theory’ to listen, life is too short (because they waste time), and so on. It’s a waste of time to argue with such prejudiced bigots too much. If you explain the facts in a series of proved stages with them, they will simply have managed to forget the earlier stages by the end, and so the discussion is still inconclusive because it goes round in endless circles of repetition. They will bring up ‘objections’ which have already been answered in earlier stages, because they are genuinely biased against learning anything new unless it comes printed in Nature or some other peer-reviewed journal which they consider the final judgement. Eventually they might admit that they are confused, but they remain confident that there is some error in the facts, although they don’t know what it is! With that mentality, no progress is ever possible, because any advance can be ignored that way. Some evidence of attempted discussions is vital for the record to prove the existence of the problem and the sort of abuse which rewards stating experimental facts, but this fruitless effort to fight prejudice should not interfere with (or discourage) further progress in science:

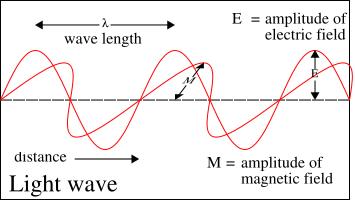

Maxwell was wrong because he showed E field strength to vary with longitudinal propagation direction in the photon, instead of showing E to vary transversely: he drew a longitudinal wave and only one of the three dimensions on the diagram is a physical dimension, since the other two dimensions are used in the diagram to show relative electric and magnetic field strengths along the propagation direction, not to show spatial dimensions. Even if you claim that the E and B field strengths are in transverse directions, they are still not varying transversely in Maxwell’s diagram, they are constant transversely and only varying as a function of longitudinal (propagation) direction. So it’s still a longitudinal wave being passed off as a transverse wave by obfuscation.

Above: the false Maxwellian light wave (illustration credit: Wikipedia). This illustration of an electromagnetic wave was included in the 3rd edition of Maxwell’s Treatise, published in 1873. Notice that there is no transverse variation at all, just a longitudinal variation. Maxwell did not label his diagram like this one, and was probably confusing the sine wave lines (which are amplitudes, not field lines) with field lines of the sort Faraday suggested were the basis of light (Faraday’s 1846 paper, Thoughts on Ray Vibrations). The only variation in the amplitude of the electric field strength which Maxwell shows is one with respect to longitudinal direction, like a longitudinal sound wave, where the intensity (pressure) varies as a function of the direction of propagation, not transversely:

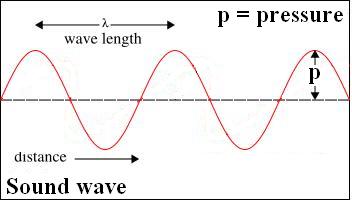

Above: sound wave with longitudinal oscillation similar to Maxwell’s longitudinal light wave. Although the sine wave line looks as if it is a ‘transverse’ wiggle, don’t be deceived: it’s a graph with only one distance axis, the other (vertical in this case) axis is not height of wave but is pressure along the distance dimension. So Maxwell fooled himself by mistaking the axes of his graph which are field strengths for spatial dimensions, which they aren’t (any more than this graph of sound wave pressure is a transverse wave!).

The waving lines are not electric and magnetic field lines, moving transversely. They are not field lines at all, they are merely lines marking the intensity of the field.

If you draw lines showing the intensity of B and the intensity of E varying as a function of one axis, then what you are plotting is a longitudinal wave. People mistake this for field lines vibrating transversely!

To show a transverse wave, you have to have E and B varying as a function of transverse distance, not as a function of longitudinal distance. Hence, you have to change the propagation direction by 90 degrees in these diagrams.

The two perpendicular sine waves labelled E- and B-fields are usually mistaken for E and B field lines oscillating in transverse z and y dimensions; but they are not. They are lines representing the intensity of the fields, not the field lines. The strength of a field is represented not by wiggling of field lines, but instead by the number density of field lines (just as in pressure maps, where the larger the number of imaginary isopressure lines which occur in a unit area, the higher the rate of change of pressure being represented, so the faster the winds).

In order to correct Maxwell’s diagram to reality, you need to rotate it by 90 degrees so that it is a transverse wave, propagating in a direction at right angles to the directions along which the field strengths vary, i.e., the positive and negative fields should propagate side by side with one another, rather than one behind the other as the Faraday/Maxwell false model shows.

All of this depends on the nature of the electromagnetic photon. In path integrals:

‘Light … ‘smells’ the neighboring paths around it, and uses a small core of nearby space. (In the same way, a mirror has to have enough size to reflect normally: if the mirror is too small for the core of nearby paths, the light scatters in many directions, no matter where you put the mirror.)’ – R. P. Feynman, QED, Penguin, 1990, page 54.

The electromagnetic field energy exists everywhere in the vacuum, but it’s only observable if we can detect an asymmetry: magnetic fields are asymmetries in the usually balanced curls which cancel each other, positive electric fields are asymmetries in the normal balance of positive and negative field energy, etc.

Now, when you get near actual matter, the field intensity from any ‘static charge’ (trapped dynamic field) associated with the matter affects the light because it adds to one of the field components in it.

So sending a photon (electric and magnetic fields) through the electric and magnetic fields of matter gives a smooth effect on large scales (slowing down, refraction) that you can calculate easily from path integrals (see Feynman’s QED book) if your photon travels through electric fields weaker than 1.3*10^18 V/m. If however, the photon is of short wavelength, it is less likely to be scattered even by a strong electric field, and will then penetrate much closer to a fermion, where it may then encounter a pair of virtual fermions created by pair production in the vacuum by electric fields exceeding 1.3*10^18 V/m (Schwinger’s threshold). So gamma rays undergo different interactions from the usual scattering. If you want to know why light bends in the presence of gravitating mass, this post (figures 1, 2, 3 and table 1) deals with mass and gravity while this post (figures 2, 3, 4) explains gauge bosons and photons and how they are physically related to each other.
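The threshold figure quoted above agrees with the standard expression for the Schwinger critical field, E_c = m^2 c^3 / (e*hbar), which this post does not write out. The sketch below evaluates it for the electron using rounded standard constants; the function name is illustrative.

```python
# Schwinger critical field for electron-positron pair production.
ELECTRON_MASS = 9.109e-31       # kg
C = 2.998e8                     # speed of light, m/s
ELEMENTARY_CHARGE = 1.602e-19   # coulombs
HBAR = 1.0546e-34               # reduced Planck constant, J s

def schwinger_field(mass_kg=ELECTRON_MASS):
    """Return E_c = m^2 c^3 / (e * hbar) in volts per metre."""
    return mass_kg ** 2 * C ** 3 / (ELEMENTARY_CHARGE * HBAR)

e_crit = schwinger_field()
print(f"Schwinger threshold: {e_crit:.2e} V/m")
```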

Massive electrically neutral bosons, like ‘Higgs’ bosons in some ways, provide mass. However it is not done in the way of the usual symmetry breaking of U(1)xSU(2) into U(1) at low energy; instead the correct symmetry group is SU(2) and its 3 gauge bosons exist in massless forms at low energy which cause electromagnetism and gravity, and one handedness of them gets supplied with mass at high energy (when they are massive weak gauge bosons). The mass-giving boson has a rest mass of 91 GeV, which gives rise to the massive weak bosons at high energy. At low energy, these neutrally charged, massive bosons cause smooth deflection of light by gravity because their electromagnetic fields (they contain electromagnetic fields, like a ‘neutral’ photon) interact with passing photons. The more energy the passing photon has, the more strongly it interacts. The ‘Higgs’ interacts with both electromagnetic and gravitational fields; neutral and charged gauge bosons. This is why it gives rise to mass the way we observe mass. This makes a great many checkable predictions: it predicts the masses of all observable fermions.

Above: Gauge boson mechanism for gravity and electromagnetism from SU(2) with three massless gauge bosons; the massive versions of the same gauge bosons provide the weak force. For a larger version (easier to read the type), please refer to Figs. 2, 3, and 4 in the earlier post here.

Energy Conservation in the Standard Model

Renormalization is explained by the running couplings, the varying relative charge for the same type of force in collisions at different energies, i.e., different distances between particles (the higher the collision energy, the closer the particles approach before being stopped and deflected by the Coulomb repulsion).

As explained in various previous posts, e.g. here, the relative strength of electromagnetic interactions increases by 7% as the collision energy increases from about 0.5 MeV to about 80 GeV, but the strong force behaves differently, falling as energy increases (except for a peaking effect at relatively low energy), as though it is being powered by vacuum effects caused by energy shielded from the electromagnetic force due to the radial polarization of virtual fermions, created by pair production in the Dirac sea above the IR cutoff (see Fig. 1).

Fig. 1 – How the renormalization group running couplings for the strong and electromagnetic forces are related. The weak force is not shown; it is similar to the electromagnetic force except that it is mediated by massive gauge bosons which give it a short range and a weak strength. Notice that at high energy the electromagnetic running coupling (relative electric charge) increases until it reaches the grain size of the vacuum (called the ‘UV’ cutoff energy, which isn’t shown here), while the strong coupling falls towards zero. The mechanism is that the energy, sapped from the electromagnetic field by the radially-polarized virtual fermion pairs in the vacuum, goes into short ranged virtual particles and powers the strong force. Calculating this energy is easy from electromagnetic theory, and allows the short-range nuclear force couplings to be calculated by subtraction. All you have to do is keep accurate accounts for what energy is being used for.
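For readers who want to see how a running electromagnetic coupling is usually computed, here is a minimal sketch of the textbook one-loop, leading-logarithm QED formula. It is not the mechanism or calculation proposed in this post, and it includes only the electron loop, which is an assumption made here to keep the example short.

```python
import math

ALPHA_0 = 1 / 137.036        # low-energy fine-structure constant
ELECTRON_MASS_MEV = 0.511    # electron rest energy, MeV

def alpha_one_loop(q_mev, fermion_mass_mev=ELECTRON_MASS_MEV, charge=1.0):
    """Leading-log effective coupling at energy q_mev for a single fermion loop.

    Only meaningful for q_mev much larger than fermion_mass_mev.
    """
    log_term = math.log(q_mev ** 2 / fermion_mass_mev ** 2)
    return ALPHA_0 / (1.0 - (ALPHA_0 * charge ** 2 / (3.0 * math.pi)) * log_term)

alpha_80gev = alpha_one_loop(80_000.0)                    # 80 GeV in MeV
increase_percent = 100.0 * (alpha_80gev / ALPHA_0 - 1.0)
print(f"1/alpha at 80 GeV (electron loop only): {1 / alpha_80gev:.2f}")
print(f"increase over low-energy value: {increase_percent:.1f}%")
```

With the electron loop alone the rise is only about 2%; the larger figure quoted in the text corresponds to including the other charged fermion loops, which this sketch leaves out.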

Fig. 1 ignores the speculative theory of supersymmetry which is based on the false guess that, at very high energy, all force couplings have the same numerical value; to be specific, the minimal supersymmetric standard model – the one which contains 125 parameters instead of just the 19 in the standard model – makes all force couplings coincide at alpha = 0.04, near the Planck scale. Although this extreme prediction can’t be tested, quite a lot is known about the strong force at lower and intermediate energies from nuclear physics and also from various particle experiments and observations of very high energy cosmic ray interactions with matter, so, in the book Not Even Wrong (UK edition), Dr Woit explains on page 177 that – using the measured weak and electromagnetic forces – supersymmetry predicts the strong force incorrectly high by 10-15%, when the experimental data is accurate to a standard deviation of about 3%.

This error is caused by the assumption that the strong coupling is similar to weak and electromagnetic couplings near the Planck scale; instead, it is much smaller, so the extrapolation of the supersymmetry unification calculation to lower energies yields a falsely high prediction of the strong force coupling constant. Supersymmetry is totally wrong. It is not science because nobody knows the values of all the 125 parameters the theory needs. It can’t predict the masses of the supersymmetric partners it requires (an unobserved supersymmetric bosonic partner for every observed fermion and a supersymmetric fermion partner for every observed boson in the universe), so it makes no falsifiable prediction at all (apart from the false prediction mentioned by Dr Woit). It has no mechanism. There is no evidence to support supersymmetry, there is no mechanism to support supersymmetry, there is no science in supersymmetry. The idea it is based on, that all force couplings have a similar value near the Planck scale, is groundless ‘grand unified theory’ hot air, lacking any physics.

It’s interesting how the potential energy of the various (strong, weak, electromagnetic) fields varies quantitatively as a function of distance from particle cores (not just as a function of collision energy).

The principle of conservation of energy then makes predictions for the variations of different standard model charges with distance.

I.e., the strong (QCD) force peaks at a particular distance.

At longer distances, it falls exponentially because of the limited range of the massive pions which mediate it.

At much shorter distances (where it is mediated by gluons) it also decreases.
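The exponential fall-off at longer distances is conventionally described by a Yukawa factor exp(-r/r0), where the range r0 = hbar/(m*c) is set by the mass of the mediating particle. The sketch below assumes that standard form and uses the charged pion mass as the mediator mass; neither the formula nor the numbers are taken from this post, and the short-distance gluon regime is not modelled at all.

```python
import math

HBAR_C_MEV_FM = 197.327      # hbar * c in MeV fm (standard value)
PION_MASS_MEV = 139.57       # charged pion rest energy, MeV (assumed mediator)

def yukawa_range_fm(mass_mev):
    """Return the range hbar / (m c) in femtometres for a mediator of given mass."""
    return HBAR_C_MEV_FM / mass_mev

def yukawa_suppression(r_fm, mass_mev):
    """Return the exponential factor exp(-r / range) at separation r_fm."""
    return math.exp(-r_fm / yukawa_range_fm(mass_mev))

pion_range = yukawa_range_fm(PION_MASS_MEV)
print(f"pion range: {pion_range:.2f} fm")
for r in (0.5, 1.0, 2.0, 4.0):
    print(f"r = {r:.1f} fm -> suppression {yukawa_suppression(r, PION_MASS_MEV):.3f}")
```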

How does energy conservation apply to such ‘running couplings’ or variations in effective charge with energy and distance?

This whole way of thinking objectively and physically is ignored completely by the standard model QFT. As the electric force increases at shorter distance, the QCD force falls off; the total potential energy is constant; the polarized vacuum creates QCD by shielding electric force. This physical mechanism makes falsifiable predictions about how forces behave at high energy, so it can be checked experimentally.

Fig. 2 – Qualitative (not to scale) representation of Mainstream theory (M-theory) groupthink which contradicts empirical data, which lacks mechanisms, and which doesn’t accomplish any falsifiable predictions because the 6 curled up extra dimensions in the Calabi-Yau manifold have unknown sizes and shapes since they are supposed to be rolled up into an unobservably small volume. Without knowing the parameters for these 6 extra dimensions, there is no particular M-theory, just 10^500 possibilities, which means 10^500 theories all with different physics. This is so vague it is non-falsifiable at present and so at present it is not even wrong, according to Woit. Stringers should therefore shut up about string theory and give other alternatives which do make falsifiable predictions some chance to make themselves heard over the stringy hype noise level. Again, the weak force is not shown in the diagram: it is generally fairly similar to electromagnetism although it has a different strength and a limited range. Notice that in the diagram, both gravity and electromagnetism have a constant residual charge at low energies (i.e., mass as gravitational charge, and electric charge). The strength of the gravity charge at low energy is about 10^40 times weaker than electromagnetism, so gravity is exaggerated in this diagram, just to show it exists at low energy.

Compare Fig. 1 to Fig. 2, and you see the difference between hard physical reality with experimental evidence to back it up (see last half-dozen blog posts, for evidence), and Platonic fantasy. Fig. 2, M-theory, is really in the Boscovich tradition: an attempt to unify all forces by mathematical means without a paradigm shift or any increase in physical understanding of the forces.

Now that quantum gravity is reasonably well sorted out, in my spare time I will write a paper on the Standard Model and its correct unification with gravity (see previous six posts on this blog for the basic evidence). The main challenge now is to produce complete quantitative calculations for force unification by this mechanism, making additional predictions to those already made (and confirmed), and comparing the results to experimental data already available. One interesting mathematical innovation worth mentioning, which may be helpful in dealing with relationships between quarks and leptons at very high energy, is category theory, which deals with transformations. Kea (Marni Sheppeard) is an expert on this.

Update (22 July): Not Even Wrong has news that the SU(2) isospin charge of the weak force exhibits confinement properties:

‘There’s a potentially important new paper on the arXiv from Terry Tomboulis, entitled Confinement for all values of the coupling in four-dimensional SU(2) gauge theory. Tomboulis claims to prove that SU(2) lattice gauge theory has confining behavior (area law fall off of Wilson loops at large distances) for all values of the coupling at the scale of the cutoff, no matter how small. This conjectured behavior is something that quite a few people tried to prove during the late seventies and eighties, without success. Tomboulis is one of the few people who has kept seriously working on the problem, and it looks like he may have finally gotten there. The method he is using goes back to work of ‘t Hooft in the late 1970s, and involves considering the ratio of the partition function with an external flux in the center of SU(2) and the partition function with no such flux. For a recent review article about this whole line of thinking by Jeff Greensite, see here. For shorter, less technical articles by Tomboulis about earlier results in the program he has been pursuing, see here, here, and here.’

It is well known that the SU(3) strong force has confinement properties (confining quarks in hadrons), but the fact that SU(2) may do this job is interesting, and makes the relationship between SU(2) and SU(3) clearer. There are several recent posts on this blog about SU(2).

One successful way to introduce gravity to the standard model is to change the interpretation of the standard model by using a version of SU(2) which produces electromagnetism, weak forces and gravity. This can occur if the 3 gauge bosons can acquire mass according to handedness, or not acquire mass. Those that acquire mass from a suitable mass-giving field become the 3 weak force gauge bosons, with weak strength and limited range. Those that don’t acquire mass are 3 massless gauge bosons: one uncharged (photon-like) and two charged radiations which cannot propagate by themselves since they are massless and have infinite self-inductance. These two charged radiations are gauge boson exchange radiation, mediating positive and negative fields respectively. They can only propagate in two opposite directions at the same time (i.e., continuing exchange of radiation between charges), so that the magnetic field curls get cancelled out, as explained by Figures 2 and 4 of an earlier post, here. The massless uncharged photon is the spin-1 (push and shove) graviton, predicting gravity.

The SU(3) force is similar in a strange way to this model for SU(2) as an electro-weak-gravity. SU(3) has both direct mediation of strong forces via colour charged gluon exchange radiation (this binds quarks into hadrons), and indirect (longer range) mediation of strong forces via mesons like pions (this binds hadrons into nuclei against the repulsive Coulomb force that exists between protons).

So you get two types of forces created by SU(3): small-scale, directly-mediated, gluon forces and indirectly-mediated, longer range meson forces. Similarly, for SU(2) we have two types of forces: three massive gauge bosons mediating short-ranged weak nuclear interactions, and three massless versions mediating long-range (inverse square law) electromagnetism and gravitation. There is a nice economy here, ‘two force systems for the price of one’ in both SU(2) and SU(3) symmetry groups. (Quite the opposite of string theory, where you get zero real physics in return for infinite mathematical complexity.)

Update (24 July): Dr Peter Orland has made a comment to this post about confinement. Confinement under a short ranged force such as the colour force in SU(3) is represented by the increase in colour charge as quarks move apart: this means that they are confined because the attractive charges increase as they move apart, slowing them down (higher energies in Figs 1 and 2 correspond to smaller distances between quarks). Over a range of distances between quarks, this variation in effective charges means that the charge variation with distance offsets the inverse square law (all forces, including the short ranged strong force, are proportional to the product of the coupling constants or charges involved in the interaction, divided by the square of the distance, although unlike the long range gravity and electromagnetism force laws, the coupling constant or relative charge is not constant but varies with distance and falls exponentially to zero at long ranges in short-ranged nuclear interactions). This allows quarks asymptotic freedom to move about within a certain volume. If quarks stray too far, the attractive strong force predominates, and the quarks are pulled back, and confined. There is also the effect that the vast amount of energy you need to knock a quark out of a hadron exceeds the amount of energy needed to create a new quark-antiquark pair, so instead of getting a free quark isolated, you end up creating a new hadron. That’s why quarks can’t be isolated. Here are some more details on the physics for electromagnetism and for SU(3):

‘The Landau pole behavior of QED is a consequence of screening by virtual charged particle-antiparticle pairs, such as electron–positron pairs, in the vacuum. In the vicinity of a charge, the vacuum becomes polarized: virtual particles of opposing charge are attracted to the charge, and virtual particles of like charge are repelled. The net effect is to partially cancel out the field at any finite distance. Getting closer and closer to the central charge, one sees less and less of the effect of the vacuum, and the effective charge increases.

‘In QCD the same thing happens with virtual quark-antiquark pairs; they tend to screen the color charge. However, QCD has an additional wrinkle: its force-carrying particles, the gluons, themselves carry color charge, and in a different manner. Roughly speaking, each gluon carries both a color charge and an anti-color charge. The net effect of polarization of virtual gluons in the vacuum is not to screen the field, but to augment it and affect its color. This is sometimes called antiscreening. Getting closer to a quark diminishes the antiscreening effect of the surrounding virtual gluons, so the contribution of this effect would be to weaken the effective charge with decreasing distance.

‘Since the virtual quarks and the virtual gluons contribute opposite effects, which effect wins out depends on the number of different kinds, or flavors, of quark. For standard QCD with three colors, as long as there are no more than 16 flavors of quark (not counting the antiquarks separately), antiscreening prevails and the theory is asymptotically free. In fact, there are only 6 known quark flavors.’ – http://www.answers.com/topic/asymptotic-freedom?cat=technology
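The flavour limit quoted above can be checked with a few lines of arithmetic. This is only a sketch, assuming the textbook one-loop coefficient b0 = (11/3)*N_c - (2/3)*n_f for an SU(N_c) gauge theory with n_f quark flavours (antiscreening wins while b0 is positive); the function names are made up for illustration and are not from the quoted article.

```python
from fractions import Fraction


def one_loop_b0(n_colors: int, n_flavors: int) -> Fraction:
    """One-loop coefficient b0 = (11/3)*N_c - (2/3)*n_f (assumed textbook form).

    b0 > 0 means gluon antiscreening beats quark screening,
    i.e. the theory is asymptotically free at one loop.
    """
    return Fraction(11 * n_colors, 3) - Fraction(2 * n_flavors, 3)


def max_flavors_for_asymptotic_freedom(n_colors: int = 3) -> int:
    """Largest whole number of flavours for which b0 stays positive."""
    n_f = 0
    while one_loop_b0(n_colors, n_f + 1) > 0:
        n_f += 1
    return n_f


if __name__ == "__main__":
    print("max flavours for 3 colours:", max_flavors_for_asymptotic_freedom(3))
    print("b0 with the 6 known flavours:", one_loop_b0(3, 6))
```

With three colours the coefficient changes sign between 16 and 17 flavours, which is where the "no more than 16" in the quotation comes from.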

As far as SU(2) confinement is concerned, a meson contains a quark-antiquark pair, and this is due to SU(2) isospin. The evidence in the previous half dozen posts is that SU(2) gauge bosons without mass are electromagnetism and gravity, replacing U(1). The atom is an example of the lack of electromagnetic confinement: the electron can be isolated simply because the energy needed for pair production of leptons is higher than the binding energy of an electron to an atom. This is because the electric charge doesn’t increase as the electron-proton distance increases. (For quarks, the opposite is the case: the pair production energy for quark-antiquark pairs is lower than the energy needed for a quark to escape from a hadron. Hence, the fact that quarks are confined and can’t ever be isolated in nature is a purely quantitative result due to the increasing charge with distance and the fact that the quark binding energy is bigger than the quark pair production energy. The fact that electrons can escape from atoms individually is just due to the lower binding energy of electrons in atoms, and the fact that the attractive electromagnetic force between electrons and protons falls instead of increasing as distance increases.) Gravity also comes out of this SU(2) with massless gauge bosons. Gravity tends to confine masses into lumps because it is always attractive.

Copy of a comment intended for Not Even Wrong blog, which unfortunately contained a typing error and was deleted:

It appeared in the March 1 1974 issue with the title Black Hole Explosions?. Taylor’s paper (with P.C.W. Davies as co-author) arguing that Hawking was wrong appeared a few months later as Do Black Holes Really Explode?

This idea that black holes must evaporate if they are real simply because they are radiating, is flawed: air molecules in my room are all radiating energy, but they aren’t getting cooler: they are merely exchanging energy. There’s an equilibrium.

Moving to Hawking’s heuristic mechanism of radiation emission, he writes that pair production near the event horizon sometimes leads to one particle of the pair falling into the black hole, while the other one escapes and becomes a real particle. If on average as many fermions as antifermions escape in this manner, they annihilate into gamma rays outside the black hole.

Schwinger’s threshold electric field for pair production is 1.3*10^18 volts/metre. So at least that electric field strength must exist at the event horizon, before black holes emit any Hawking radiation! (This is the electric field strength at 33 fm from an electron.) Hence, in order to radiate by Hawking’s suggested mechanism, black holes must carry enough electric charge to make the electric field at the event horizon radius, R = 2GM/c^2, exceed 1.3*10^18 v/m.

Schwinger’s critical threshold for pair production is E_c = (m^2)*(c^3)/(e*h-bar) = 1.3*10^18 volts/metre. Source: equation 359 in http://arxiv.org/abs/quant-ph/0608140 or equation 8.20 in http://arxiv.org/abs/hep-th/0510040
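As a quick numerical check of the two figures just quoted (the 1.3*10^18 volts/metre threshold and the 33 fm distance from an electron), here is a minimal sketch. The constants are rounded CODATA values typed in by hand, and the function names are only illustrative.

```python
import math

# Rounded CODATA values, SI units (entered by hand for this sketch)
M_E = 9.1093837e-31        # electron mass, kg
C = 2.99792458e8           # speed of light, m/s
E_CHARGE = 1.602176634e-19 # electronic charge, C
HBAR = 1.054571817e-34     # reduced Planck constant, J s
EPS0 = 8.8541878128e-12    # permittivity of free space, F/m


def schwinger_field() -> float:
    """Schwinger threshold E_c = m^2 c^3 / (e * h-bar), in volts/metre."""
    return M_E**2 * C**3 / (E_CHARGE * HBAR)


def coulomb_field(charge: float, r: float) -> float:
    """Coulomb field strength Q / (4*Pi*permittivity*r^2), in volts/metre."""
    return charge / (4 * math.pi * EPS0 * r**2)


def radius_for_field(charge: float, field: float) -> float:
    """Distance from a point charge at which its Coulomb field equals `field`."""
    return math.sqrt(charge / (4 * math.pi * EPS0 * field))


E_c = schwinger_field()
r_c = radius_for_field(E_CHARGE, E_c)

if __name__ == "__main__":
    print(f"Schwinger threshold: {E_c:.3e} V/m")
    print(f"Distance from an electron with that field: {r_c * 1e15:.1f} fm")
```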

Now the electric field strength from an electron is given by Coulomb’s law with F = E*q = qQ/(4*Pi*Permittivity*R^2), so

E = Q/(4*Pi*Permittivity*R^2) v/m.

Setting this equal to Schwinger’s threshold for pair-production, (m^2)*(c^3)/(e*h-bar) = Q/(4*Pi*Permittivity*R^2). Hence, the maximum radius out to which fermion-antifermion pair production and annihilation can occur is

R = [(Qe*h-bar)/{4*Pi*Permittivity*(m^2)*(c^3)}]^{1/2}.

where Q is the black hole’s electric charge, e is the electronic charge, and m is the electron’s mass. Set this R equal to the event horizon radius 2GM/c^2, and you find the condition that must be satisfied for Hawking radiation to be emitted from any black hole:

Q > 16*Pi*Permittivity*[(mMG)^2]/(c*e*h-bar)

where M is black hole mass. So the amount of electric charge a black hole must possess before it can radiate (according to Hawking’s mechanism) is proportional to the square of the mass of the black hole. This is quite a serious problem for big black holes and frankly I don’t see how they can ever radiate anything at all.
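To put numbers on this condition, the sketch below evaluates Q = 16*Pi*Permittivity*[(mMG)^2]/(c*e*h-bar) and confirms that a hole carrying exactly that charge has the Schwinger threshold field at R = 2GM/c^2. The solar mass figure and the function names are assumptions chosen for illustration; the constants are rounded CODATA values.

```python
import math

# Rounded CODATA values, SI units (entered by hand for this sketch)
M_E = 9.1093837e-31        # electron mass, kg
C = 2.99792458e8           # speed of light, m/s
E_CHARGE = 1.602176634e-19 # electronic charge, C
HBAR = 1.054571817e-34     # reduced Planck constant, J s
EPS0 = 8.8541878128e-12    # permittivity of free space, F/m
G = 6.67430e-11            # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30           # approximate solar mass, kg (illustrative input)


def horizon_radius(mass: float) -> float:
    """Event horizon radius R = 2GM/c^2."""
    return 2 * G * mass / C**2


def schwinger_field() -> float:
    """Schwinger threshold E_c = m^2 c^3 / (e * h-bar)."""
    return M_E**2 * C**3 / (E_CHARGE * HBAR)


def min_charge(mass: float) -> float:
    """Charge needed so the Coulomb field at the horizon reaches E_c."""
    return 16 * math.pi * EPS0 * (M_E * mass * G) ** 2 / (C * E_CHARGE * HBAR)


def field_at_horizon(charge: float, mass: float) -> float:
    """Coulomb field of `charge` evaluated at the horizon radius of `mass`."""
    return charge / (4 * math.pi * EPS0 * horizon_radius(mass) ** 2)


if __name__ == "__main__":
    for mass in (M_SUN, 10 * M_SUN):
        q = min_charge(mass)
        print(f"M = {mass:.3e} kg: Q_min = {q:.3e} C "
              f"({q / E_CHARGE:.3e} electronic charges)")
```

Multiplying the mass by ten multiplies the required charge by a hundred, which is the M^2 scaling stated above.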

On the other hand, it’s interesting to look at fundamental particles in terms of black holes (Yang-Mills force-mediating exchange radiation may be Hawking radiation in an equilibrium).

When you calculate the force of gauge bosons emerging from an electron as a black hole (the radiating power is given by the Stefan-Boltzmann radiation law, dependent on the black hole radiating temperature which is given by Hawking’s formula), you find it correlates to the electromagnetic force, allowing quantitative predictions to be made. See https://nige.wordpress.com/2007/05/25/quantum-gravity-mechanism-and-predictions/#comment-1997 for example.
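The two ingredients named here (Hawking’s temperature formula and the Stefan-Boltzmann law) can be combined in a few lines. This is a sketch under stated assumptions: it uses the standard formulae for an uncharged Schwarzschild black hole, T = h-bar*c^3/(8*Pi*G*M*k) and power = sigma*area*T^4, applied to an object with the electron’s mass. It does not reproduce the force calculation in the linked comment; it only shows how the radiating temperature and power are obtained.

```python
import math

# Rounded CODATA values, SI units (entered by hand for this sketch)
HBAR = 1.054571817e-34  # reduced Planck constant, J s
C = 2.99792458e8        # speed of light, m/s
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23      # Boltzmann constant, J/K
M_E = 9.1093837e-31     # electron mass, kg

# Stefan-Boltzmann constant built from the constants above
SIGMA = math.pi**2 * K_B**4 / (60 * HBAR**3 * C**2)


def hawking_temperature(mass: float) -> float:
    """Hawking temperature of an uncharged black hole of the given mass, K."""
    return HBAR * C**3 / (8 * math.pi * G * mass * K_B)


def horizon_area(mass: float) -> float:
    """Surface area of the event horizon, R = 2GM/c^2."""
    radius = 2 * G * mass / C**2
    return 4 * math.pi * radius**2


def radiated_power(mass: float) -> float:
    """Black-body power from the horizon area at the Hawking temperature, W."""
    return SIGMA * horizon_area(mass) * hawking_temperature(mass) ** 4


if __name__ == "__main__":
    print(f"Temperature for an electron-mass black hole: "
          f"{hawking_temperature(M_E):.3e} K")
    print(f"Radiated power: {radiated_power(M_E):.3e} W")
```

The product simplifies to h-bar*c^6/(15360*Pi*G^2*M^2), so the power rises as the mass falls, which is why the numbers for something as light as an electron are so large.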

You also find that because the electron is charged negative, it doesn’t quite follow Hawking’s heuristic mechanism. Hawking, considering uncharged black holes, says that either of the fermion-antifermion pair is equally likely to fall into the black hole. However, if the black hole is charged (as it must be in the case of an electron), the black hole charge influences which particular charge in the pair of virtual particles is likely to fall into the black hole, and which is likely to escape. Consequently, you find that virtual positrons fall into the electron black hole, so an electron (as a black hole) behaves as a source of negatively charged exchange radiation. Any positive charged black hole similarly behaves as a source of positive charged exchange radiation.

These charged gauge boson radiations of electromagnetism are predicted by an SU(2) electromagnetic mechanism, see Figures 2, 3 and 4 of https://nige.wordpress.com/2007/06/20/the-mathematical-errors-in-the-standard-model-of-particle-physics/

For quantum gravity mechanism and the force strengths, particle masses, and other predictions resulting, please see https://nige.wordpress.com/about/

Update (25 July): Because I’ve been busy preparing for major exams, I didn’t read the paper Dr Woit linked to about SU(2) confinement at the time, and mentioned the news because it seemed relevant. Now I have read the relevant paper, http://arxiv.org/PS_cache/arxiv/pdf/0707/0707.2179v1.pdf, it’s nowhere near as physically interesting as expected, although remarkably it does clearly describe the physical problem in quantum field theory which it addresses mathematically, on page 2:

‘The origin of the difficulty is clear. It is the multi-scale nature of the problem: passage from a short distance ordered regime, where weak coupling perturbation theory is applicable, to a long distance strongly coupled disordered regime, where confinement and other collective phenomena emerge. Systems involving such dramatic change in physical behavior over different scales are hard to treat. Hydrodynamic turbulence, involving passage from laminar to turbulent flow, is another well-known example, which, in fact, shares some striking qualitative features with the confining QCD vacuum.’

Page 3 mentions the relationship of the new approach to other symmetry groups:

‘Only the case of gauge group SU(2) is considered explicitly here. The same development, however, can be applied to other groups, and, most particularly, to SU(3) which exhibits identical behavior under the approximate decimations.’

It is unfortunately applied to bogus stringy ideas dating from the late 1960s, which will mislead students just as epicycles did for centuries; the analogy of forces to elastic bands under tension is only useful for forces that increase with distance, instead of falling with increasing distance. See page 34:

‘It is worth remarking again that in an approach based on RG [renormalization group] decimations the fact that the only parameter in the theory is a physical scale emerges in a natural way. Picking a number of decimations can be related to fixing the string tension. That this can be done only after flowing into the strong coupling regime reflects the fact that this dynamically generated scale is an ‘IR effect’. The coupling g(a) is completely determined in its dependence on a once the string tension is fixed. In particular, g(a) → 0 as a → 0. Note that this implies that there is no physically meaningful or unambiguous way of non-perturbatively viewing the short distance regime independently of the long distance regime. Computation of all physical observable quantities in the theory must then give a multiple of the string tension or a pure number. In the absence of other interactions, this scale provides the unit of length; there are in fact no free parameters.’

The string analogy is irrelevant as stated; the fact that a force can be considered a string tension is neither here nor there (analogies abound in physics; just because an analogy exists, it does not mean that it is physically real since there could be a better [more predictive, falsifiable] analogy out there, and in fact there is). It’s interesting if the author’s claim, of getting the whole theory from merely the scale and dispensing with all other parameters, is correct. If that is the case, it makes things simpler.

It would be nice in future for real experts to check the content of new papers they report on, determining whether they are actually correct or not. Otherwise, what these people tend to do is comment on new arxiv papers without actually committing themselves to saying the paper is right or wrong. That’s a good way to be fashionable, but it’s not really that scientific. What ends up occurring is that groupthink and consensus emerge, not scientific fact. Papers get mentioned not because the person mentioning them has really checked them and found them correct, but because they ‘look interesting’ or the author ‘has been working a long time’ on the topic, or some other scientifically-irrelevant chatter. It reminds me of the peer-review process. A new innovator of a totally radical approach to a subject doesn’t – by definition – have any ‘peers’ who are up to speed on the new idea. Peer-review even at the best of times doesn’t involve experiments being replicated and calculations checked; the peer-reviewer is more likely to endorse the paper if he or she ‘respects’ the author and finds the paper ‘interesting’. If the peer-reviewer who gets 50 manuscripts a week checked radical ideas in each paper, it would take (at least) several weeks of non-stop, full-time work to carefully evaluate and check in detail all the results in each paper, and the peer-review system would become clogged and break down. So instead, trust is placed in the author. This trust is based on the author being either well known to ‘peers’ or else being affiliated with a trustable institution. (It is for this reason that peer-review in many cases is anti-science. 
It’s the old boys club principle; the mutual back-slapping tea party, which is very elitist and excellent at applying groupthink-based censorship criteria to heretical new developments that would adversely affect the status of the more senile members, and it’s ingenious at rewarding orthodoxy and conformity, while not caring about actual physical facts quite so much as the social side of conferences, the networking of contacts, the getting potential peer-reviewers on the ‘right side’ by explaining ideas over a few beers, bribes, corruption, etc. This sounds scientific in one sense, but it’s not what science is all about; it’s missing the whole point. This reminds one of Lord Cardigan’s charge of the light brigade in 1854, where cavalry charged against overwhelming odds and the French Marshal Pierre Bosquet commented: C’est magnifique, mais ce n’est pas la guerre. The thing is, if it had been filmed, it would have looked like war. The idea that a successful war is one where there isn’t too much carnage along the way, is just one idea or fashion about what ‘war’ is supposed to be about. Getting off topic a bit, people get used to seeing a lot of blood in war films, and if reality doesn’t match celluloid, then the army gets a ticking off for taking things too easy, and approaching the enemy too cautiously! They’re being paid to risk their lives today, whereas yesterday they were being paid to save lives and preserve liberty. That’s exactly the sort of subtle change in public perception of what people’s jobs are, that creeps up on society in politics and the media. It makes you sick. Groupthink is very fickle! There’s no science involved in all these political and social areas, where definitions are arbitrary and so can change at whim. Moving back to the analogy of science orthodoxy: getting a paper hyped up in the news is not necessarily the same thing as actually doing science, it’s actually almost irrelevant. 
Publication is only relevant to science if other people are actually in a position to read the paper and switch their own ideas and research areas in that direction, if needed. If those who could help are busy socialising and riding a bandwagon with their peer-reviewers, and that kind of thing, then nothing can possibly happen.)

Update (27 July 2007):

Physical position of electric and magnetic fields in photons and gauge bosons

Light is an example of a massless boson. There is an error in Maxwell’s model of the photon: he draws it with the variation of electric field (and magnetic field) occurring as a function of distance along the longitudinal axis, say the x axis.

Maxwell uses the z and y axes to represent not distances but magnetic and electric field STRENGTHS.

These field strengths are drawn to vary as a function of one spatial dimension only, the propagation direction.

Hence, he has drawn a pencil of light, with zero thickness and with no indication of any transverse waving.

What you get occurring is that people look at it and think the waving E-field line is a physical outline of the photon, and that the y axis is not electric field strength, but is distance in the y-direction.

In other words, they think it is a three dimensional diagram, when in fact it is one dimensional (x-axis is the only dimension; the other two axes are field strengths varying solely as a function of distance along the x-axis).

I explained this to Catt, but he wasn’t listening, and I don’t think others listen either.

The excellent thing is that you can correct the error in Maxwell’s model to get a real transverse wave, and then you find that it doesn’t need to oscillate at all in the longitudinal direction in order to propagate!

This is because the variation in E-field strength and B-field strength actually occurs at right angles to the propagation direction (which is the opposite of what Maxwell’s picture shows when plotting these field strengths as a variation along the longitudinal axis or propagation direction of light, not the transverse direction!).

This is useful for discriminating between a longitudinally oscillating real photon, and a virtual boson which has no longitudinal oscillation, just a transverse wavelength, and can be endlessly long in the direction of propagation in order to allow smooth transfer of force by virtual boson exchange. The virtual boson doesn’t oscillate charges it encounters like a photon; it merely transfers energy and momentum p = E/c (if absorbed in the interaction without re-emission) or p = 2E/c (if absorbed and then re-emitted with opposite direction; i.e., if reflected back the way it came). This discriminates gravitons (virtual photons) from real photons. The gauge bosons of electromagnetism are distinct because they are charged; positive charged exchange radiation is possible because it is going in both directions between two protons (mediating a positive electric field in the vacuum) and so it is travelling through itself in two directions at once (see figures 2, 3 and 4 here). Similarly for negative gauge bosons being mediated between two electrons. The similar charges get knocked apart by the exchange, just as two people firing guns at one another tend to recoil apart (both from actually firing bullets and from being hit by them). 
For attractive forces, shielding from the inward force due to the reaction to the outward force of the big bang (accelerating mass gives an outward force by Newton’s 2nd law, which by Newton’s 3rd law is accompanied by an inward reaction force, which turns out to be carried by gauge boson radiation) creates attraction, just as in the case of the gravitation mechanism. However, you need to allow for the fact that the path integral of charged gauge bosons will allow a multiplication of force across the universe, because some special paths will encounter (by chance) alternating positive and negative charges (like a series of charged capacitor plates with vacuum dielectric between them), making the effective potential multiply up by a large factor, while the majority of likely paths encounter positive and negative charges at random and so will behave like a series of charged capacitors randomly orientated in series (think about a battery pack; if you put a large number of batteries into it randomly, i.e., without getting them all the same way around, on average the voltage will be cancelled out to give zero output). Hence, only the special path works. The path integral geometry shows that the special path is a zig-zag like a drunkard’s walk between alternating positive and negative charges across the universe. This is not as efficient (for creating a net force in a line) as a straight line series of alternately positive and negatively charged capacitor plates, but it does multiply up the force a lot. The resulting force is equal to that of gravity times 10^40.
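The battery pack analogy is easy to try numerically. The sketch below is only a toy model under stated assumptions: each cell contributes +1 or -1 volt with equal probability, and the names are invented for illustration. It shows the two textbook random-walk results: the average net voltage over many trials is close to zero, while the typical (root-mean-square) magnitude of a single stack grows like the square root of the number of cells, well short of the N volts an ordered stack would give.

```python
import random


def net_voltage(n_cells: int, rng: random.Random) -> float:
    """Net voltage of n_cells unit cells inserted with random polarity."""
    return sum(rng.choice((-1.0, 1.0)) for _ in range(n_cells))


def simulate(n_cells: int, trials: int, seed: int = 0) -> tuple[float, float]:
    """Return (mean, root-mean-square) net voltage over many random stacks."""
    rng = random.Random(seed)
    totals = [net_voltage(n_cells, rng) for _ in range(trials)]
    mean = sum(totals) / trials
    rms = (sum(v * v for v in totals) / trials) ** 0.5
    return mean, rms


if __name__ == "__main__":
    for n in (100, 400, 1600):
        mean, rms = simulate(n, trials=2000, seed=1)
        print(f"N = {n:5d}: mean = {mean:+.2f} V, rms = {rms:.1f} V, "
              f"sqrt(N) = {n ** 0.5:.1f} V, ordered stack = {n} V")
```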

So this mechanism makes checkable, falsifiable predictions for force strengths, particle masses, cosmological stuff (the biggest falsifiable prediction was made and published in 1996, years before the observational confirmation of that prediction, which showed that the universe is indeed not decelerating; this is actually due to a lack of gravitational mechanism at great distances because of the geometry of the shielding mechanism, and/or you can consider the weakening of gravity due to the redshift of gravitons exchanged between receding masses over vast distances in the expanding universe; include this quantum gravity model as a correction to general relativity, and then ‘evolving dark energy’ and its small positive cosmological constant become as redundant, misleading, unnecessary, unfalsifiable, pseudoscientific, hogwash as would be the inclusion of caloric in modern heat theory).

Maxwell’s drawing of a light photon in his final 1873 3rd edition of A Treatise on Electricity and Magnetism is actually a longitudinal wave because the two variables (E and B) are varying solely as a function of propagation direction x, not as functions of transverse directions y and z which aren’t represented in the diagram (which uses y and z to represent field strengths along x, instead of directions y and z in real space).

The full description of the electromagnetic gauge boson and its relationship to a photon can be found in figures 2, 3 and 4 of:

Further update (27 July): Charge experiment: charge up anything with electricity. You can do that by sending in a light-velocity logic step: the energy flows in at light velocity. It then has no mechanism to slow down below light velocity.

Thus, static electricity is ‘composed’ in a sense of energy going at light speed in all directions, in an equilibrium of currents. (Remember that in electricity, the gauge bosons of the electromagnetic field carry the energy, and the drift of electrons carries trivial kinetic energy because the electrons only go at a snail’s pace and have small masses; the kinetic energy of the electrons is half their mass multiplied by the square of their net velocity.) The magnetic field curls cancel out because there is always as much energy going in direction y as in direction -y. So only electric field (the only thing about charge that is observable) is experienced as a result.

The question is why the discoverer doesn’t forcefully state this, and why he doesn’t extend it properly by considering the smallest possible unit charge, the electron (which itself must be trapped light velocity energy, and we can then do a lot). Instead, like Kepler, he bogs down his few vital laws in loads of false trivia (Kepler was also an astrologer and had planets being held in their orbits by magnetism; his books are full of pseudoscience and Newton’s chief biographer pointed out that Newton’s genius was in part being able to wade through Kepler’s vast output of nonsense and pick out the useful laws, ignoring the rest). One of the claims popularly made against that discoverer is that of dismissing ‘charge’ as a fundamental entity.

Actually, everyone who claims to have observed charge has just observed a field, say an electric field, not the core of the electron itself. So ‘charge’ has never been directly observed and there is no evidence that it is not composed of trapped field bosons. In fact, charges can be created in the vacuum within strong or extremely high frequency fields by pair production, given just gamma rays which have an energy exceeding the rest masses of the charges. Particles have spin and one known way to allow for the known quantum numbers such as spin properties consistently is to get such a model of a particle as a ‘loop’ or ‘string’ (not M-theory superstring which has extra spatial dimensions) to create charge as a permanent field by trapping radiation in a small loop or similar, that would then be indistinguishable from ‘charge’. Scientifically, the word ‘charge’ is only defensible so far as it has been observed. The electric charge has not been directly observed (the electric field is observed) since it exists on a scale so small it is unobservable (Planck scale or black hole scale, which is still smaller), so people observe the electric field and decide whether that implies a charge or not. There are electric fields in radio and other waves. Therefore, when you observe such a field, it does not automatically prove what the cause of the field is. When you name ‘charge’ you don’t know, directly, anything about what it is that you are naming. The word charge is vague: it is used to denote any appearance of an electric field associated with apparently static electricity, but there is no reason why a ‘charge’ shouldn’t just be a trapped non-static field. On the contrary, there is plenty of evidence for it; it unifies matter and radiation.

Update (16 August 2007):

ENERGY DENSITY IN ELECTRIC, GRAVITATIONAL, & NUCLEAR FORCE FIELDS

Here’s a demonstration of how to calculate the energy density of various fields for working out how energy is conserved when short range nuclear forces are created from electromagnetic force which is shielded by the polarized particles of the disrupted fabric of the vacuum at very high energy (very close to a particle).

Energy density in an electric field is easy to calculate in electromagnetism because you can charge up a capacitor to a constant potential or voltage v (two parallel flat metal plates with an “x” metre gap such as vacuum between them, the vacuum being called the dielectric of free space) and there is then a constant electric field of v/x volts/metre between the plates. Knowing how much electrical energy you put into the capacitor to charge it up allows you to relate the electric field strength v/x to the energy per unit volume in the field (i.e., the energy used to charge the capacitor, divided by the product of the gap between the plates “x” and the area of the plates).

Coulomb’s law for electric charges q and Q is:

F = qQ/(4*Pi*permittivity*r^2)

the strength of an electric field v/x (I’m not using E for electric field here or it will be too confusing; I’m using E only for energy) from charge Q is given by

F = (v/x)q

Hence

Electric field strength, v/x = F/q

= Q/(4*Pi*permittivity*r^2).

Now from the analysis of a capacitor, the energy density of an electric field is

E/V = 0.5*[permittivity]*(v/x)^2

where V here is unit volume and has nothing to do with voltage v (reference: see http://hyperphysics.phy-astr.gsu.edu/hbase/electric/engfie.html )

So the energy density of electric fields is (substituting the previous expression for electric field strength v/x around charge Q into the last formula):

E/V = 0.5*[permittivity]*[Q/(4*Pi*permittivity*r^2)]^2

= (1/32)*(Q^2)/[(Pi^2)*permittivity*r^4]

Hence, the energy density of the field around a charge varies as 1/r^4.
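The substitution above can be checked numerically. The sketch below squares the Coulomb field inside the capacitor formula 0.5*[permittivity]*(v/x)^2, compares the result with the simplified closed form (which carries the square of the charge), and confirms the 1/r^4 fall-off. The sample radius and the function names are arbitrary choices for illustration.

```python
import math

EPS0 = 8.8541878128e-12     # permittivity of free space, F/m
E_CHARGE = 1.602176634e-19  # electronic charge, C


def field_strength(charge: float, r: float) -> float:
    """Coulomb field v/x = Q / (4*Pi*permittivity*r^2), volts/metre."""
    return charge / (4 * math.pi * EPS0 * r**2)


def energy_density(charge: float, r: float) -> float:
    """Energy per unit volume 0.5*permittivity*(v/x)^2, joules per cubic metre."""
    return 0.5 * EPS0 * field_strength(charge, r) ** 2


def energy_density_closed_form(charge: float, r: float) -> float:
    """Same quantity after simplification: Q^2 / (32*Pi^2*permittivity*r^4)."""
    return charge**2 / (32 * math.pi**2 * EPS0 * r**4)


if __name__ == "__main__":
    r = 1e-10  # roughly an atomic radius in metres (illustrative choice)
    print(f"u(r)  = {energy_density(E_CHARGE, r):.3e} J/m^3")
    print(f"u(2r) = {energy_density(E_CHARGE, 2 * r):.3e} J/m^3")
    print("ratio u(r)/u(2r):",
          energy_density(E_CHARGE, r) / energy_density(E_CHARGE, 2 * r))
```

Doubling the distance cuts the energy density by a factor of 16, as expected for a 1/r^4 law.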

Now we have the energy density of an electric field, which derives from the Coulomb force, an inverse-square law rather like Newton’s in some respects. Can we use the analogy between Newton’s law and Coulomb’s law to derive the energy density of a gravitational field?

If we assume for quarks or electrons or whatever that Newton’s law is just something like 10^40 times weaker than Coulomb’s electric force law, then presumably the energy density of the gravitational field will be simply the value we calculated from Coulomb’s law, divided by 10^40:

E/V ~ (1/32)*(Q^2)/[(10^40)*(Pi^2)*permittivity*r^4]

This equation allows you to calculate approximately the energy density of the field around a unit mass like a fermion. It’s clear that the energy density varies very rapidly with distance from the middle. This is why I don’t see how you are going to get a constant energy density for space from an inverse square law: the Joules of field energy per cubic metre fall off rapidly with increasing distance. You would have to find a way to average the energy density by integrating the total energy as a function of radius.

Notice that the classical electron radius is based on this approach for the energy density of Coulomb’s law. You integrate the energy density over space from a small inner radius out to infinite radius, and you set the result equal to the known electron rest-mass energy E = mc^2 where m is electron mass. The maths then tells you the value of the inner radius you need to start the calculation (if you took the inner radius to be zero, you would get a wrong answer, infinity). The conventionally quoted result is 2.818 fm (the literal integral of the 1/r^4 energy density gives half this value; the standard definition drops that factor of 1/2), see http://en.wikipedia.org/wiki/Classical_electron_radius
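The integration can be sketched in a few lines of Python. This is only an illustration, assuming CODATA values for the constants; the variable names are hypothetical. Integrating Q^2/(32*Pi^2*permittivity*r^4) over spherical shells of volume 4*Pi*r^2*dr from an inner radius r0 to infinity gives a total field energy of Q^2/(8*Pi*permittivity*r0). Setting that equal to mc^2 gives about 1.41 fm, while the conventional classical electron radius of 2.818 fm comes from e^2/(4*Pi*permittivity*r) = mc^2, i.e. without the factor of 1/2.

```python
import math

EPSILON_0 = 8.8541878128e-12    # permittivity of free space, F/m
E_CHARGE = 1.602176634e-19      # electron charge magnitude, C
ELECTRON_MASS = 9.1093837015e-31  # kg
LIGHT_SPEED = 299792458.0       # m/s

rest_energy = ELECTRON_MASS * LIGHT_SPEED**2  # E = mc^2 in joules


def field_energy_outside(charge, inner_radius):
    """Total Coulomb field energy from inner_radius out to infinity (J)."""
    return charge**2 / (8 * math.pi * EPSILON_0 * inner_radius)


# Inner radius at which the literal integral equals mc^2.
radius_from_integral = E_CHARGE**2 / (8 * math.pi * EPSILON_0 * rest_energy)

# Conventional classical electron radius (no factor of 1/2).
classical_radius = E_CHARGE**2 / (4 * math.pi * EPSILON_0 * rest_energy)

print(f"radius from field-energy integral: {radius_from_integral * 1e15:.3f} fm")
print(f"conventional classical radius:     {classical_radius * 1e15:.3f} fm")
```

Either way the radius is of order a femtometre, and the field energy diverges as the inner radius goes to zero.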

In previous posts’ comment sections, it is pointed out that within such a radius the vacuum energy is disorganised with pair production spontaneously creating short-lived pairs of particles which then annihilate in collisions (with average time scales as indicated by Heisenberg’s statistical impact uncertainty formula). Because this energy at short ranges is so disorganised, it has high entropy and cannot be extracted, so it’s not useful energy. Because it can’t be extracted, that short ranged chaotic field energy is not included in the equation E=mc^2. The discrepancy between the classical electron radius and the known shorter ranged physics in quantum field theory is due to the assumption that the releasable energy E=mc^2 is the total energy, when it is in fact just that portion of the total energy which is sufficiently organised that it can be converted into gamma rays or whatever when matter and antimatter annihilate; the rest of the energy remains unobservable as gauge bosons with high entropy, going in all directions at once between all charges.

Such disorganised energy doesn’t contribute to organised energy any more than you can extract the energy of air molecules hitting you continually. Air molecules at room temperature and pressure have a mean speed of about 500 m/s, so the 1.2 kg of air in just one cubic metre at sea level has a kinetic energy of 0.5*1.2*500^2 = 150,000 Joules. But this energy is almost totally useless to you because it is disorganised. It isn’t useful energy, because you can’t use it to do work. You can’t use the energy of air molecules to power your laptop or light. It will mix gases slowly (by diffusion), but that’s about it. This is why “energy” is such an abused term in the media. Technically having an immense amount of energy means little, because not all energy is the same: what matters in practice is how easy it is to extract it. (The ocean contains 9 million tons of gold, so if you believe that extracting something is always economical then you can just go down to the seaside with your distillation set and get rich: dissolved in ocean water you’ll have access to 180 times the total amount of gold ever mined on land throughout the whole of history. Obviously, this is useless advice since 3.5% of sea water is a mixture of salts, and you would have to separate the gold from the salts. It’s just more expensive to get gold that way than to go into a shop and buy gold! Germany did extensive research on this after WWI, when its leading chemists seriously considered the extraction of gold from sea water as a means to pay war reparations to France. When the efforts to accomplish it failed, hyperinflation resulted and the dissent led to fascism and WWII.)

The crackpots who write fanciful things promising the use of “zero-point energy” to cure disease and power spaceships, before they even have a clue as to how much such energy there is or whether it can be extracted, are really anti-science, because they are just trying to impose a religious belief system ahead of the facts. (It’s a situation like the charlatan string theorists who celebrate and hype their “discovery” of quantum gravity in lucrative articles and books before they have even identified a speculative theory from their landscape of 10^500 variants.)

Update (17 August 2007):

I should obviously work on the quantification of force unification indicated by Fig. 1 above. My idea is to calculate quantitatively the way the electric charge increases in apparent strength above the IR cutoff (collision energy of 0.5 MeV per electron), due to electrons approaching closely and seeing less polarized vacuum shielding the core charge (see Figure 26.10 of the paperback edition of Penrose’s book, Road to Reality). The significance of the IR cutoff energy is that it corresponds to the outer radius at which the electric field strength of a fermion is strong enough to cause pair production in the vacuum. This threshold electric field strength required for pair production was first calculated by Julian Schwinger, and his formula can be found as equation 359 in Freeman Dyson’s lecture notes on Advanced Quantum Mechanics http://arxiv.org/abs/quant-ph/0608140 and as equation 8.20 in Luis Alvarez-Gaume and Miguel A. Vazquez-Mozo, Introductory Lectures on Quantum Field Theory, http://arxiv.org/abs/hep-th/0510040.

It is easy to translate the IR or UV cutoff energy into the actual distance from a fermion of unit charge, at least approximately. For example, if you assume Coulomb scattering occurs, two electrons of IR cutoff energy (0.511 MeV each) will approach each other until the potential energy of Coulomb repulsion has negated all of their kinetic energy and their velocities have dropped to zero. At that time, they are at the distance of closest approach, and thereafter will begin accelerating apart. (Obviously such a simple calculation ignores inelastic scatter effects like the release of x-ray radiation accompanying deceleration of charge.) From this comment:

‘The kinetic energy is converted into electrostatic potential energy as the particles are slowed by the electric field. Eventually, the particles stop approaching (just before they rebound) and at that instant the entire kinetic energy has been converted into electrostatic potential energy of E = (charge^2)/(4*Pi*Permittivity*R), where R is the distance of closest approach.

‘This concept enables you to relate the energy of the particle collisions to the distance they are approaching. For E = 1 MeV, R = 1.44 x 10^-15 m (this assumes one moving electron of 1 MeV hits a non-moving electron, or that two 0.5 MeV electrons collide head-on).

‘But just thinking in terms of distance from a particle, you see unification very differently to the usual picture. For example, experiments in 1997 (published by Levine et al. in PRL v.78, 1997, no.3, p.424) showed that the observable electric charge is 7% higher at 92 GeV than at low energies like 0.5 MeV. Allowing for the increased charge due to reduced shielding by vacuum polarization, the 92 GeV electrons approach within 1.8 x 10^-20 m. (Assuming purely Coulomb scatter.)

‘Extending this to the assumed unification energy of 10^16 GeV, the distance of approach is down to 1.6 x 10^-34 m, and the Planck scale is ten times smaller.

‘If you replot graphs like http://www.aip.org/png/html/keith.htm (or Fig 66 of Lisa Randall’s Warped Passages) as force strength versus distance from particle core, you have to treat leptons and quarks differently.

‘You know that vacuum polarization is shielding the core particle’s electric charge, so that electromagnetic interaction strength rises as you approach unification energy, while strong nuclear forces fall.

‘Considering what happens to the electromagnetic field energy that is shielded by vacuum polarization, is it simply converted into the short ranged weak and strong nuclear forces? Problem: leptons don’t undergo strong nuclear interactions, whereas quarks do. The answer to this is that quarks are so close together in hadrons that they share the same vacuum polarization shield, which is therefore stronger than in leptons, creating vacuum energies that allow QCD. If you consider 3 electron charges very close together so that they all share the same polarized vacuum zone, the polarized vacuum will be 3 times stronger, so the shielded charge of each seen from a great distance may be 1/3 of the electron’s charge (a downquark).’ (Obviously, weak isospin charge and weak hypercharge make things more complex, masking this simple mechanism in general, see for example my post here for details, as well as other recent posts on this blog, i.e., last 6-7 posts.)
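The distances of closest approach in the quoted comment can be reproduced with a short Python sketch. It assumes purely Coulomb scattering, as the comment does, with all the collision energy converted to potential energy E = (charge^2)/(4*Pi*Permittivity*R). The function name and the `charge_scale` parameter are hypothetical; `charge_scale=1.07` follows the comment’s assumption of a 7% larger effective charge at 92 GeV.

```python
import math

EPSILON_0 = 8.8541878128e-12  # permittivity of free space, F/m
E_CHARGE = 1.602176634e-19    # electron charge magnitude, C
JOULES_PER_MEV = 1.602176634e-13


def closest_approach(energy_mev, charge_scale=1.0):
    """Distance R (metres) at which Coulomb potential energy equals energy_mev.

    charge_scale multiplies the low-energy charge on both particles, so the
    potential energy scales as charge_scale**2 (an assumption of this sketch).
    """
    charge = charge_scale * E_CHARGE
    energy_joules = energy_mev * JOULES_PER_MEV
    return charge**2 / (4 * math.pi * EPSILON_0 * energy_joules)


r_ir = closest_approach(1.0)                        # 1 MeV total collision energy
r_92gev = closest_approach(92e3, charge_scale=1.07)  # 92 GeV, 7% larger charge
r_unif = closest_approach(1e19)                     # 10^16 GeV, unscaled charge

print(f"1 MeV:     R = {r_ir:.3e} m")
print(f"92 GeV:    R = {r_92gev:.3e} m")
print(f"10^16 GeV: R = {r_unif:.3e} m (before any charge correction)")
```

The unscaled figure at 10^16 GeV comes out slightly below the 1.6 x 10^-34 m quoted, which presumably includes a similar correction for increased charge.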

Carl Brannen and Tony Smith have kindly made some interesting comments about the possibility of preons in quarks, which seem to me to explain vital aspects of the SU(3) colour charge (strong force), as discussed on Kea’s blog Arcadian Functor here. Anyway, my idea is to use the logarithmic correction law for the energy-dependence of charge between the IR and UV cutoffs, such as equation 7.13 or 7.17 on pages 70-71 of http://arxiv.org/PS_cache/hep-th/pdf/0510/0510040v2.pdf. (The summation in that equation accounts for the fact that at higher energies you get pair production of heavier charges contributing, i.e., above 0.511 MeV/particle you get electron and positron pair production, above 105 MeV/particle you also get muon and anti-muon pair production, and you get pairs of hadrons as well as leptons at the higher energies.)

(Notice the footnote on page 71 thanking Lubos Motl, the result of an email he sent the authors after I asked Lubos on his blog why the calculation for the electric charge of an electron is wrong according to the earlier version of that paper: see the erroneous equation 7.17 on page 70 of the original version of the paper, which is still also held on arxiv as http://arxiv.org/PS_cache/hep-th/pdf/0510/0510040v1.pdf. This original version of the equation falsely claims that the electron’s charge increases from the relative value of 1/137 at low energies (i.e., all energies below and up to the IR cutoff of 0.511 MeV) to 1/128 at an energy of 92 GeV just as a result of electron and positron pair production. But when you put the numbers into that original version of the equation, you get the wrong answer. This puzzled me, because I’m used to textbook calculations being checked and workable. Clearly the authors hadn’t actually put the numbers in and done the calculation! So it turned out, because between 0.511 MeV and 92 GeV there are loads of other vacuum creation-annihilation loops of leptons and hadrons other than merely electron and positron pair production.)

The total effect is that at all distances beyond the IR cutoff, i.e., a radius of 1.44 fm, the electron’s charge is the normal value in the textbook, e = 1.60*10^-19 Coulombs (which is 1/137 in dimensionless quantum field theory charge units, see this post for a discussion of why). As you go to an energy of 92 GeV, i.e., as you get approximately 92,000/0.511 or 180,000 times closer to the electron than you are at 1.44 fm, you find that the electron’s charge apparently increases by 7% to 1.71*10^-19 Coulomb (or 1/128 in QFT dimensionless charge units). This 7% increase was experimentally verified, as shown by Levine, Koltick, et al., in a good paper published in PRL in 1997. The physical reason for this “increase” is that as you get closer to the electron core, there is less shielding (i.e. fewer polarized pair production particles) in the space between you and the core of the electron. It’s like climbing above the clouds in an aircraft: the sunlight doesn’t increase because you are closer to the sun, but because there is less condensed water vapour between you and the sun. For an electron, the cloud of polarised pair production charges extends out to the IR cutoff or something like a radius of 1.44 fm. However, this exact number is controversial because it differs somewhat from the radius corresponding to Schwinger’s threshold electric field strength for pair production, which is 1.3*10^18 volts/metre, and this field strength occurs out to a radius of r = [e/(2m)]*[(h-bar)/(Pi*Permittivity*c^3)]^{1/2} = 3.2953 * 10^{-14} metre = 32.953 fm from the middle of an electron. This radius also differs from the classical electron radius of 2.818 fm already discussed, so there are some issues over the precise value of the IR cutoff you should take; should it correspond to a distance from an electron of 1.44 fm, 32.95 fm, or 2.82 fm?
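The point about needing more than electron and positron loops can be illustrated with a short Python sketch. It uses the standard leading-log (one-loop) running of the QED coupling, 1/alpha(Q) = 1/alpha(0) - [1/(3*Pi)] * sum over charged fermions of (colour multiplicity)*(charge^2)*ln(Q^2/m^2). This is the textbook form and is assumed here, not copied from equation 7.17 of the paper; the function name, the fermion table and the choice to switch each fermion on abruptly at Q = m are all simplifications of this sketch. Only the charged leptons are included, because the hadronic contribution cannot be treated this crudely.

```python
import math

ALPHA_LOW = 1 / 137.036  # low-energy fine-structure constant

# (name, mass in MeV, electric charge in units of e, colour multiplicity)
LEPTONS = [
    ("electron", 0.51099895, -1.0, 1),
    ("muon", 105.6583755, -1.0, 1),
    ("tau", 1776.86, -1.0, 1),
]


def inverse_alpha(energy_mev, fermions):
    """Leading-log estimate of 1/alpha at the given energy scale (MeV)."""
    total = 0.0
    for _name, mass, charge, colours in fermions:
        if energy_mev > mass:  # crude threshold: loop contributes only above its mass
            total += colours * charge**2 * math.log(energy_mev**2 / mass**2)
    return 1 / ALPHA_LOW - total / (3 * math.pi)


electron_only = inverse_alpha(92e3, LEPTONS[:1])
all_leptons = inverse_alpha(92e3, LEPTONS)
print(f"electron loops only at 92 GeV: 1/alpha = {electron_only:.2f}")
print(f"e, mu, tau loops at 92 GeV:    1/alpha = {all_leptons:.2f}")
```

With electron loops alone the coupling only reaches roughly 1/134.5 at 92 GeV, and adding the muon and tau brings it to roughly 1/132, so the remainder of the shift towards 1/128 has to come from hadronic loops.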
Renormalization does not answer this question, because the physics is not sensitive to the precise energy of the IR cutoff when calculating the magnetic moment of leptons or the Lamb shift (some of the few things that can be accurately calculated from QFT). There are two cutoffs, one at low energy (hence “IR” meaning infrared, which is the name given to the cutoff in visible light at the low energy end of the visible spectrum) and one at high energy (hence “UV” meaning ultraviolet, which is the cutoff in visible light at the high energy end of the visible spectrum). The UV cutoff seems to occur simply because once you get within a certain extremely small distance of the core of a charge, there is physically not enough room for pair production and polarization of those charges to occur in that tiny space between you and the core, so there is no way physically that the mathematical logarithmic equation for the running coupling can continue to apply.
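For reference, the Schwinger threshold field and the corresponding radius discussed above can be recomputed with a minimal Python sketch. It assumes the usual expression for the critical field, (m^2)*(c^3)/(e*h-bar), and an unshielded Coulomb field e/(4*Pi*Permittivity*r^2) around the electron; the variable names are hypothetical and the constants are CODATA values.

```python
import math

EPSILON_0 = 8.8541878128e-12      # permittivity of free space, F/m
E_CHARGE = 1.602176634e-19        # electron charge magnitude, C
ELECTRON_MASS = 9.1093837015e-31  # kg
LIGHT_SPEED = 299792458.0         # m/s
HBAR = 1.054571817e-34            # reduced Planck constant, J s

# Schwinger's threshold field strength for pair production (V/m).
schwinger_field = ELECTRON_MASS**2 * LIGHT_SPEED**3 / (E_CHARGE * HBAR)

# Radius at which the electron's Coulomb field falls to that threshold.
radius_from_field = math.sqrt(E_CHARGE / (4 * math.pi * EPSILON_0 * schwinger_field))

# The same radius from the closed-form expression quoted in the text.
radius_closed_form = (E_CHARGE / (2 * ELECTRON_MASS)) * math.sqrt(
    HBAR / (math.pi * EPSILON_0 * LIGHT_SPEED**3)
)

print(f"Schwinger threshold field: {schwinger_field:.3e} V/m")
print(f"radius at threshold field: {radius_from_field * 1e15:.2f} fm")
```

The result is about 33 fm, an order of magnitude larger than both the 1.44 fm closest-approach distance and the 2.818 fm classical electron radius.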